Home Lab Journey Part 02

The first post of this series ended with all the necessary infrastructure (VMs) deployed on a Proxmox hypervisor, ready to run a full Kubernetes cluster. As I said there, I take the k8s cluster itself for granted, so in this post we will provision applications into that cluster.

Cilium

One of the first things you encounter in the computing world is that nothing works without proper communication. A bare-metal installation of Kubernetes comes without a Container Network Interface (CNI) plugin, so you have to install and configure one yourself. Personally I found the choice of CNI to be the hardest one, but I went with Cilium because it supports Layer-2 announcements of load-balancer IPs over ARP. This makes it easy to expose applications without extra components (e.g. MetalLB).

Installation

To install Cilium and the Cilium CLI just follow the official documentation. I used Helm for the installation, so I started by adding the official repository.

helm repo add cilium https://helm.cilium.io/

Then install the Cilium CLI so you can configure and monitor Cilium:

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

As I mentioned earlier, I chose Cilium for its ability to combine IPAM with Layer-2 announcements: Services get IP addresses, and those addresses are advertised on the LAN. That way, we can easily expose k8s Services and reach them from the rest of the network.

To do so, Cilium needs to be installed with some features enabled, specifically with its replacement for the default kube-proxy. Change the IP address and port of the k8s API server, replace the {QPS} and {BURST} placeholders with client rate limits suited to your cluster, and execute the commands below to install Cilium with all the necessary components.

export API_SERVER_IP=192.168.x.x
export API_SERVER_PORT=6443

# "helm upgrade --reuse-values" assumes a Cilium release already exists;
# on a fresh cluster use "helm install" with the same flags, minus --reuse-values.
helm upgrade cilium cilium/cilium --version 1.17.1 \
  --namespace kube-system \
  --reuse-values \
  --set l2announcements.enabled=true \
  --set k8sClientRateLimit.qps={QPS} \
  --set k8sClientRateLimit.burst={BURST} \
  --set kubeProxyReplacement=true \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT}

Finally check that everything is healthy by using:

cilium status --wait

After Cilium finishes installation, if you check your k8s cluster, the nodes should be in Ready state.

kubectl get nodes
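Node readiness alone does not show whether the kube-proxy replacement and the L2 announcements were actually switched on. Below is a minimal sketch for checking this. It assumes the agent DaemonSet carries the chart's default name `cilium` in `kube-system` and that the agent image ships the `cilium-dbg` binary, as recent releases do. `cilium_feature` is a hypothetical helper name.

```shell
# Print the agent's runtime setting for one config key.
# $1 is a key as printed by cilium-dbg, e.g. KubeProxyReplacement.
# Assumption: the DaemonSet is named "cilium" and lives in kube-system.
cilium_feature() {
  kubectl -n kube-system exec ds/cilium -- cilium-dbg config --all | grep "$1"
}
```

Calling `cilium_feature KubeProxyReplacement` and `cilium_feature EnableL2Announcements` should each print a line ending in `true` if the Helm values were picked up.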

Again, following the official documentation of Cilium is the proper way to move forward.

Configuration

Now that Cilium is installed, we need to provision a couple of things to achieve proper IP addressing and ARP advertising. First we need a Layer-2 announcement policy that specifies which k8s nodes will reply to ARP requests and which interfaces they will use.

---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumL2AnnouncementPolicy
metadata:
  name: home-lab-l2-policy
spec:
  nodeSelector:
    matchExpressions:
      - key: node-role.kubernetes.io/control-plane
        operator: DoesNotExist
  interfaces:
    - ^eth[0-9]+
  externalIPs: true
  loadBalancerIPs: true

And of course the IP address pool. Cilium will then allocate IP addresses from that pool to the Services you expose externally. The range is specific to each environment, but let's say I used 10-15 addresses from an internal RFC 1918 address space.

---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "home-lab-lb-ip-pool"
spec:
  blocks:
    - start: 192.168.x.x
      stop: 192.168.x.x
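Both manifests still have to be applied to the cluster. Here is a small sketch of that step, assuming the two YAML documents above were saved to files of your choosing. `apply_l2_config` is a hypothetical helper name, and the file names in the usage line are placeholders.

```shell
# Apply the L2 policy and the IP pool, then list the resulting objects.
# $1 and $2 are the paths of the two manifest files (names are up to you).
apply_l2_config() {
  kubectl apply -f "$1" -f "$2" || return 1
  kubectl get ciliuml2announcementpolicy
  kubectl get ciliumloadbalancerippool
}
```

For example, `apply_l2_config l2-policy.yaml lb-ip-pool.yaml`. Both objects should then appear in the listings.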

We will run a test Nginx Pod and expose it through a LoadBalancer service, in order to check that everything works as expected.

kubectl run nginx-test --image nginx --restart Never --port 80

kubectl expose pod nginx-test --target-port 80 --port 80 --type LoadBalancer

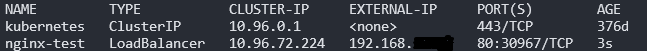

Then if you check the nginx-test service you’ll notice it has an external IP associated, taken from the above IP pool. You can also curl it to verify.
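The check described above can be scripted. This is a rough sketch rather than a definitive test: `lb_ip` and `check_lb` are hypothetical helper names, and it assumes the Service reports its address under `.status.loadBalancer.ingress[0].ip`, which is where Kubernetes publishes load-balancer IPs.

```shell
# Print the first external IP assigned to a LoadBalancer Service ($1).
lb_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Request the Service over HTTP; succeed only if it has an IP and answers.
check_lb() {
  local ip
  ip=$(lb_ip "$1")
  [ -n "$ip" ] || { echo "no external IP assigned to $1 yet" >&2; return 1; }
  curl --silent --fail --max-time 5 --output /dev/null "http://${ip}/" \
    && echo "$1 reachable at ${ip}"
}
```

Run it as `check_lb nginx-test` from a machine on the same LAN. Once the Service has an address, `kubectl -n kube-system get lease` should also list a lease whose name starts with `cilium-l2announce-`, held by the node that answers ARP for that IP.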

Ingress

Networking is there, so the next logical step is to deploy an Ingress controller so we can make the network services available through a protocol-aware mechanism.

Note that you can install Cilium's own ingress controller or one of the many others out there. I chose ingress-nginx, which is maintained by the Kubernetes project.

Just follow the docs and install it with:

helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx --create-namespace

Now the cluster is ready to route incoming connections based on hostnames, paths, URIs, etc.
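To see host-based routing in action, an Ingress can be pointed at the nginx-test Service from earlier. The sketch below makes a few assumptions: the controller was installed with the Helm release name used above (so its Service is called `ingress-nginx-controller`), the chart's default ingress class `nginx` is in place, and the hostname passed in is one you pick yourself. `expose_via_ingress` and `curl_ingress` are hypothetical helper names.

```shell
# Create an Ingress routing every path of host $1 to Service $2 on port $3.
expose_via_ingress() {
  local host="$1" service="$2" port="$3"
  kubectl create ingress "$service" --class=nginx \
    --rule="${host}/*=${service}:${port}"
}

# Send a request to the ingress controller with the Host header set to $1.
# Assumption: the controller Service is "ingress-nginx-controller".
curl_ingress() {
  local host="$1" ip
  ip=$(kubectl -n ingress-nginx get service ingress-nginx-controller \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  curl --silent --fail --max-time 5 --header "Host: ${host}" "http://${ip}/"
}
```

For example, `expose_via_ingress nginx.home.lab nginx-test 80` followed by `curl_ingress nginx.home.lab`, where `nginx.home.lab` is a made-up hostname. Setting the Host header by hand avoids needing a DNS record for the test.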

Summary

In the previous post we had just VMs; now we have a working Kubernetes cluster with connectivity. Great, we can move forward to deploy applications! In the next one I will explore how to keep everything up to date with GitOps. Until then, have fun!