Kubernetes Citrix Ingress Controller

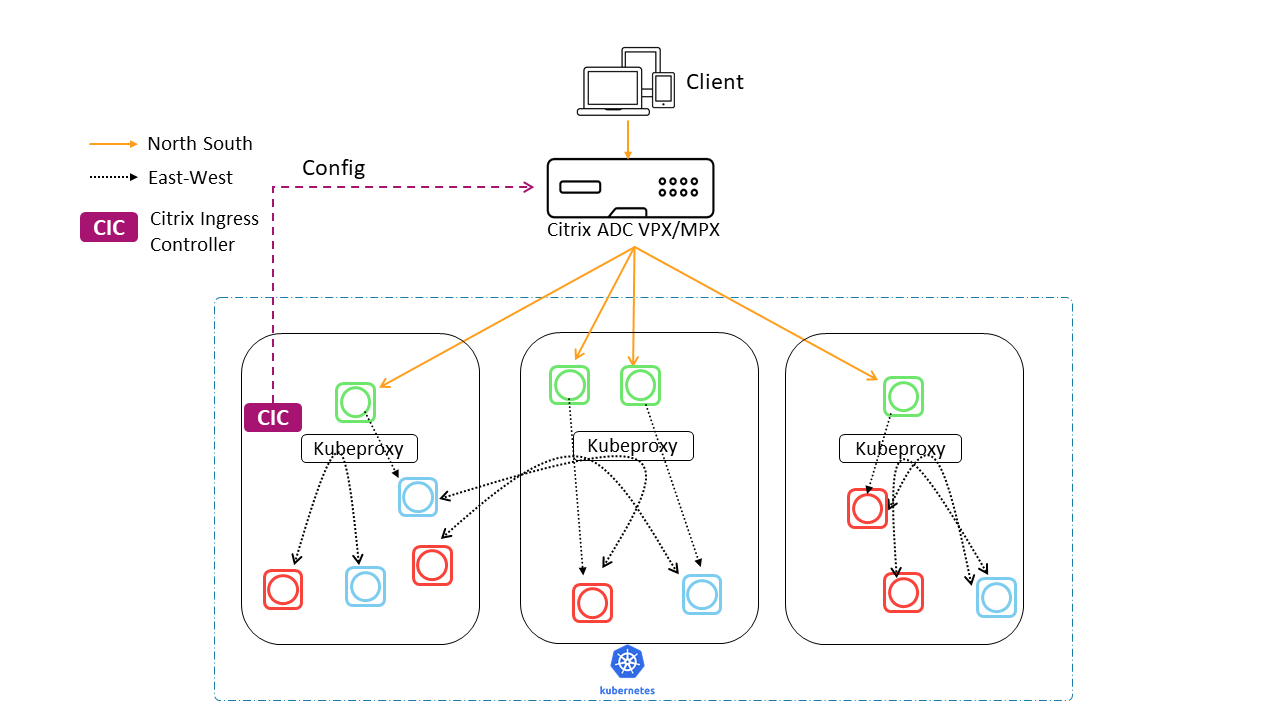

I’ll try to explain (and keep notes on) how to publish Kubernetes services to the outside world through a Citrix ADC (NetScaler) using the Citrix Ingress Controller. The deployment will look as follows, and you can find more information in the official Citrix documentation.

Prerequisites

As you would expect, you need a working Kubernetes cluster (preferably an on-prem installation) and a Citrix ADC VPX/MPX to play with. I did this lab with a 3-node (1 master and 2 workers) Kubernetes cluster (v1.20.1) and a Citrix ADC VPX (v13.1.17) deployed on VMware ESXi. You can find the relevant manifests on the GitHub page of the citrix-ingress-controller.

Ingress Controller RBAC

First, we are going to deploy to the default namespace the ClusterRole, ClusterRoleBinding and ServiceAccount that give the ingress controller the permissions it needs to read the Kubernetes resources. The cic-rbac.yaml file will look something like:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cic-k8s-role
rules:
  - apiGroups: [""]
    resources: ["endpoints", "pods", "secrets", "nodes", "routes", "namespaces", "configmaps", "services"]
    verbs: ["get", "list", "watch"]
  # services/status is needed to update the loadbalancer IP in service status for integrating
  # service of type LoadBalancer with external-dns
  - apiGroups: [""]
    resources: ["services/status"]
    verbs: ["patch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create"]
  - apiGroups: ["extensions"]
    resources: ["ingresses", "ingresses/status"]
    verbs: ["get", "list", "watch", "patch"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses", "ingresses/status", "ingressclasses"]
    verbs: ["get", "list", "watch", "patch"]
  - apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["citrix.com"]
    resources: ["rewritepolicies", "authpolicies", "ratelimits", "listeners", "httproutes", "continuousdeployments", "apigatewaypolicies", "wafs", "bots", "corspolicies"]
    verbs: ["get", "list", "watch", "create", "delete", "patch"]
  - apiGroups: ["citrix.com"]
    resources: ["rewritepolicies/status", "continuousdeployments/status", "authpolicies/status", "ratelimits/status", "listeners/status", "httproutes/status", "wafs/status", "apigatewaypolicies/status", "bots/status", "corspolicies/status"]
    verbs: ["patch"]
  - apiGroups: ["citrix.com"]
    resources: ["vips"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: ["route.openshift.io"]
    resources: ["routes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["config.openshift.io"]
    resources: ["networks"]
    verbs: ["get", "list"]
  - apiGroups: ["network.openshift.io"]
    resources: ["hostsubnets"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["crd.projectcalico.org"]
    resources: ["ipamblocks"]
    verbs: ["get", "list", "watch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cic-k8s-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cic-k8s-role
subjects:
  - kind: ServiceAccount
    name: cic-k8s-role
    namespace: default
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cic-k8s-role
  namespace: default

Now let’s deploy it with the following command:

kubectl apply -f cic-rbac.yaml
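Before moving on, it can be worth spot-checking that the ServiceAccount really received the permissions it needs. The following is a minimal sketch, assuming cic-rbac.yaml was applied unchanged. It relies on `kubectl auth can-i` with impersonation, so your own user must be allowed to impersonate service accounts (a cluster-admin is). The `check_cic_rbac` function name and the `KUBECTL` override variable are illustrative additions of mine, not part of the Citrix manifests.

```shell
#!/bin/sh
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print the commands
# instead of running them against a cluster.
KUBECTL="${KUBECTL:-kubectl}"

# Identity of the ServiceAccount created by cic-rbac.yaml (namespace "default").
CIC_SA="system:serviceaccount:default:cic-k8s-role"

# check_cic_rbac: hypothetical helper that asks the API server whether the
# ServiceAccount may use a few of the verbs granted by the ClusterRole.
check_cic_rbac() {
  for perm in \
    "list ingresses.networking.k8s.io" \
    "watch services" \
    "get secrets" \
    "create events"
  do
    printf '%s: ' "$perm"
    # $perm is left unquoted on purpose so it splits into VERB and RESOURCE.
    $KUBECTL auth can-i $perm --as="$CIC_SA"
  done
}
```

After sourcing the snippet, run `check_cic_rbac`; if the ClusterRole and binding are in place, each line should end in `yes`.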

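Create a Secret for the Citrix ADC login

We will create a Secret resource named nslogin, so that the ingress controller can get the login credentials for the NetScaler. The command uses my lab credentials; substitute the username and password of your own ADC.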

kubectl create secret generic nslogin --from-literal=username='nsroot' --from-literal=password='nsroot'
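If you prefer to keep the Secret as a manifest next to the other YAML files, the same object can be written declaratively. The sketch below only generates the file; the `NS_USER`/`NS_PASS` shell variables and the file name nslogin-secret.yaml are assumptions of mine for illustration. Keep in mind that the values under `data` are base64-encoded, not encrypted.

```shell
#!/bin/sh
# Illustrative variables; they default to the lab credentials used above.
NS_USER="${NS_USER:-nsroot}"
NS_PASS="${NS_PASS:-nsroot}"

# Secret .data values must be base64-encoded.
# printf '%s' avoids encoding a trailing newline into the value.
user_b64=$(printf '%s' "$NS_USER" | base64)
pass_b64=$(printf '%s' "$NS_PASS" | base64)

# Write a manifest equivalent to the "kubectl create secret generic" command.
cat > nslogin-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: nslogin
  namespace: default
type: Opaque
data:
  username: ${user_b64}
  password: ${pass_b64}
EOF

cat nslogin-secret.yaml
```

The generated file can then be applied with `kubectl apply -f nslogin-secret.yaml` instead of running the imperative command.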

Ingress Controller Deployment

Then we will apply the Deployment for the ingress controller in order to spin up the CIC pod. You have to set the management IP of the NetScaler in the “NS_IP” environment variable. In my environment the cic-deployment.yaml file looks like the following:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: cic-k8s-ingress-controller
spec:
  selector:
    matchLabels:
      app: cic-k8s-ingress-controller
  replicas: 1
  template:
    metadata:
      name: cic-k8s-ingress-controller
      labels:
        app: cic-k8s-ingress-controller
      annotations:
    spec:
      serviceAccountName: cic-k8s-role
      containers:
        - name: cic-k8s-ingress-controller
          image: "quay.io/citrix/citrix-k8s-ingress-controller:1.23.10"
          env:
            # Set NetScaler NSIP/SNIP, SNIP in case of HA (mgmt has to be enabled)
            - name: "NS_IP"
              value: "<NETSCALER-MGMT-IP>"
            # Set username for Nitro
            - name: "NS_USER"
              valueFrom:
                secretKeyRef:
                  name: nslogin
                  key: username
            # Set log level
            - name: "LOGLEVEL"
              value: "INFO"
            # Set user password for Nitro
            - name: "NS_PASSWORD"
              valueFrom:
                secretKeyRef:
                  name: nslogin
                  key: password
            # Accept the end-user license agreement
            - name: "EULA"
              value: "yes"
          args:
            - --ingress-classes
              citrix
            - --feature-node-watch
              false
          imagePullPolicy: Always

To deploy it run:

kubectl apply -f cic-deployment.yaml

Take note of the “--ingress-classes” argument: it has the value “citrix”, and we will use this value in the Ingress manifests so that the citrix-ingress-controller knows which Ingress resources it should handle.

Now if you run kubectl get pods, you will see the citrix-ingress-controller pod being created.
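Rather than polling by hand, you can wait for the rollout and then look at the controller log, which is usually the first place where a wrong NS_IP or bad credentials show up. This is a rough sketch under the assumption that the Deployment name and label from cic-deployment.yaml are unchanged; the `wait_for_cic` helper and the `KUBECTL` override are hypothetical names of mine.

```shell
#!/bin/sh
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
KUBECTL="${KUBECTL:-kubectl}"
CIC_DEPLOY="deployment/cic-k8s-ingress-controller"

# wait_for_cic: hypothetical helper that waits for the controller pod
# and prints its placement and the tail of its log.
wait_for_cic() {
  # Block until the Deployment is rolled out, or give up after 120 seconds.
  $KUBECTL rollout status "$CIC_DEPLOY" --timeout=120s || return 1
  # List the pod selected by the label used in cic-deployment.yaml.
  $KUBECTL get pods -l app=cic-k8s-ingress-controller -o wide
  # Show the most recent controller log lines.
  $KUBECTL logs "$CIC_DEPLOY" --tail=20
}
```

Call `wait_for_cic` after applying the Deployment; a non-zero return means the rollout did not finish within the timeout.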

Nginx web server example

We will deploy an Nginx Deployment and a NodePort Service to use as a backend pool in order to test the ingress part. The full manifest to apply is the following:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.21.6
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  selector:
    app: nginx
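The post does not name the file for this manifest, so the sketch below assumes it was saved as nginx.yaml (the `NGINX_MANIFEST` variable and the `deploy_nginx` helper are illustrative names of mine). It applies the manifest, waits for the two replicas, and then shows the allocated NodePort and the pod endpoints behind the Service, which is the backend information the ingress controller works from.

```shell
#!/bin/sh
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
KUBECTL="${KUBECTL:-kubectl}"
# Hypothetical file name; point it at wherever you saved the manifest.
NGINX_MANIFEST="${NGINX_MANIFEST:-nginx.yaml}"

# deploy_nginx: hypothetical helper that applies and verifies the test backend.
deploy_nginx() {
  $KUBECTL apply -f "$NGINX_MANIFEST" || return 1
  # Wait until both nginx replicas are available.
  $KUBECTL rollout status deployment/nginx-deployment --timeout=120s || return 1
  # The PORT(S) column shows the NodePort that was allocated.
  $KUBECTL get service nginx-service
  # The endpoints are the pod IPs selected by app=nginx.
  $KUBECTL get endpoints nginx-service
}
```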

Ingress resource / instructions

Now it is time to deploy the Ingress manifest with instructions for the Citrix ingress controller. The controller will translate these into Citrix ADC configuration and configure our Citrix ADC load balancer. Note that we are using the citrix class, as configured in our ingress controller Deployment. We also use some Citrix-specific annotations for the NetScaler’s configuration.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: citrix
    ingress.citrix.com/frontend-ip: "<FRONTEND-IP-ON-CITRIX-ADC>"
    ingress.citrix.com/insecure-port: "80"
    ingress.citrix.com/lbvserver: '{"nginx-service":{"lbmethod":"LEASTCONNECTION", "persistenceType":"SOURCEIP"}}'
    ingress.citrix.com/monitor: '{"nginx-service":{"type":"http"}}'
spec:
  rules:
    - host: "www.makis.com"
      http:
        paths:
          - backend:
              service:
                name: nginx-service
                port:
                  number: 80
            path: /
            pathType: Prefix

Now, if you check the Citrix ADC, you will see a Content Switching virtual server with all the necessary configuration (LB vServer, ServiceGroup, etc.) for the www.makis.com host, which we specified in the Ingress manifest.
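To test the published service from a client without touching DNS, you can point curl at the frontend IP while still sending the host name that the Ingress rule matches. This is a small sketch, assuming the frontend IP is reachable from your machine on port 80; the `probe_ingress` helper and the `CURL` override variable are hypothetical names, and 192.0.2.10 in the usage note is only a documentation placeholder address.

```shell
#!/bin/sh
# CURL can be overridden (e.g. CURL=echo) to print the command instead of running it.
CURL="${CURL:-curl}"

# probe_ingress: hypothetical helper.
#   $1 = frontend IP configured in ingress.citrix.com/frontend-ip
#   $2 = host from the Ingress rule (defaults to www.makis.com)
probe_ingress() {
  [ -n "$1" ] || { echo "usage: probe_ingress <frontend-ip> [host]" >&2; return 2; }
  frontend_ip="$1"
  host="${2:-www.makis.com}"
  # --resolve maps host:80 to the frontend IP locally, so no DNS record is
  # needed and the Host header matches the Ingress rule.
  # --write-out prints only the HTTP status code of the response.
  $CURL --silent --show-error --output /dev/null \
    --write-out '%{http_code}\n' \
    --resolve "${host}:80:${frontend_ip}" \
    "http://${host}/"
}
```

For example, `probe_ingress 192.0.2.10` with your real frontend IP substituted should print the status code returned through the ADC, which would be 200 if Nginx answers.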

Conclusion

This is the most basic configuration to start publishing services from an on-prem Kubernetes cluster. The setup has many components and a lot of flexibility, so I suggest first reading up on the Kubernetes basics and how services “talk” to each other before running a lab :)